TF-IDF Implementation Using Python

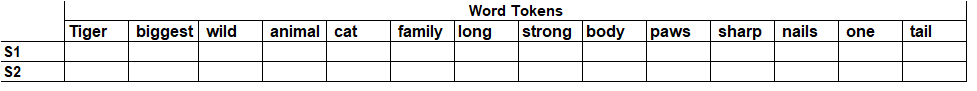

In my last blog, we discussed how the TF-IDF method can be used to extract features from text (refer to TF-IDF Method). Now we will see how to implement the TF-IDF concept in Python. To understand the implementation, let's consider the same three sentences we used in the last blog:

1. Kesri is a good movie to watch with family as it has good stories about India freedom fight.
2. The success of it depends on the performance of the actors and story it has.
3. There are no new movies releasing this month due to corona virus.

The first step is to import the libraries needed for the text processing:

```python
import pandas as pd                                          # DataFrames for tabular data
from nltk.tokenize import word_tokenize                      # splits text into word tokens (needs NLTK's 'punkt' data)
from nltk.corpus import stopwords                            # NLTK stopword lists (needs NLTK's 'stopwords' data)
from sklearn.feature_extraction.text import TfidfVectorizer  # converts documents into a TF-IDF feature matrix
```

You may have noticed that we imported TfidfVectorizer; this is the class we use to extract the text features with TF-IDF.

The second step is to store the sentences in a list: documents = ["Kesri is a go...
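To make it clearer what TfidfVectorizer computes, here is a minimal pure-Python sketch over the same three sentences. It assumes TfidfVectorizer's default settings: lowercasing, the default token pattern (which drops one-character tokens such as "a"), raw term counts, smoothed idf `ln((1 + n) / (1 + df)) + 1`, and L2 normalisation of each row. The helper name `tfidf_like_sklearn` is hypothetical and not part of any library, and unlike the imports above it does not tokenize with NLTK or remove stopwords.

```python
import math
import re
from collections import Counter

# The three example sentences from this post.
documents = [
    "Kesri is a good movie to watch with family as it has good stories about India freedom fight.",
    "The success of it depends on the performance of the actors and story it has.",
    "There are no new movies releasing this month due to corona virus.",
]

# Same default token pattern as TfidfVectorizer: words of 2+ word characters.
TOKEN_PATTERN = re.compile(r"(?u)\b\w\w+\b")


def tokenize(text):
    """Lowercase the text and keep tokens of two or more word characters."""
    return TOKEN_PATTERN.findall(text.lower())


def tfidf_like_sklearn(docs):
    """Hypothetical helper: TF-IDF with smoothed idf and L2 row normalisation.

    Returns (vocabulary, rows): vocabulary is sorted alphabetically and
    rows[i][j] is the weight of vocabulary[j] in docs[i].
    """
    tokenized = [tokenize(d) for d in docs]
    vocabulary = sorted({tok for toks in tokenized for tok in toks})
    n_docs = len(docs)

    # Document frequency: number of documents each term appears in.
    df = Counter(tok for toks in tokenized for tok in set(toks))
    # Smoothed inverse document frequency.
    idf = {t: math.log((1 + n_docs) / (1 + df[t])) + 1 for t in vocabulary}

    rows = []
    for toks in tokenized:
        counts = Counter(toks)  # raw term frequency in this document
        weights = [counts[t] * idf[t] for t in vocabulary]
        norm = math.sqrt(sum(w * w for w in weights))
        rows.append([w / norm if norm else 0.0 for w in weights])
    return vocabulary, rows


vocabulary, rows = tfidf_like_sklearn(documents)

# Show the three highest-weighted terms of each sentence.
for i, row in enumerate(rows):
    top = sorted(zip(vocabulary, row), key=lambda p: p[1], reverse=True)[:3]
    print(i, [(term, round(w, 3)) for term, w in top])
```

In the first sentence "good" outweighs "has": it occurs twice and only in that sentence, while "has" also appears in the second one and so gets a lower idf. If scikit-learn is installed, `TfidfVectorizer().fit_transform(documents).toarray()` should give the same numbers, with columns in the order returned by `get_feature_names_out()`.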