TF - IDF Implementation using Python

In my last blog, we discussed how the TF-IDF method can be used to extract features from text (see TF - IDF Method). Now we will see how to implement TF-IDF in Python. Let's use the same three sentences from the last blog.

- Kesri is a good movie to watch with family as it has good stories about India freedom fight.

- The success of it depends on the performance of the actors and story it has.

- There are no new movies releasing this month due to corona virus.

The first step is to import the necessary libraries to perform the text processing.

import pandas as pd

from nltk.tokenize import word_tokenize

from nltk.corpus import stopwords

from sklearn.feature_extraction.text import TfidfVectorizer

As you may have noticed, we imported TfidfVectorizer to extract the text features using TF-IDF.

The second step is to store the sentences in a list:

documents = ["Kesri is a good movie to watch with family as it has good stories about India freedom fight.", "The success of it depends on the performance of the actors and story it has.", "There are no new movies releasing this month due to corona virus."]

The third step is to preprocess the text: convert it to lower case, tokenize it, remove stop words, and stem the remaining words.

from nltk.stem.porter import PorterStemmer

# note: word_tokenize and stopwords need the NLTK tokenizer and stop-word data (see nltk.download)

stemmer = PorterStemmer()

# build the stop-word set once instead of reloading the list for every word
stop_words = set(stopwords.words("english"))

def preprocess(sentence):
    'changes sentence to lower case, removes stop words and stems the remaining words'
    # change sentence to lower case
    sentence = sentence.lower()
    # tokenize into words
    words = word_tokenize(sentence)
    # remove stop words
    words = [word for word in words if word not in stop_words]
    # stem
    words = [stemmer.stem(word) for word in words]
    # join words to make sentence
    return " ".join(words)

# preprocess every sentence stored in the documents list
sentences = [preprocess(document) for document in documents]

print(sentences)

The next step is to create a TfidfVectorizer object and pass the sentences to its fit_transform method to get the TF-IDF matrix:

vectorizer = TfidfVectorizer()

tfidf_model = vectorizer.fit_transform(sentences)

print(tfidf_model)

We will get the following output:

(0, 5) 0.2993851103375717

(0, 6) 0.2993851103375717

(0, 8) 0.2993851103375717

(0, 15) 0.2276900931986287

(0, 4) 0.2993851103375717

(0, 18) 0.2993851103375717

(0, 11) 0.2276900931986287

(0, 7) 0.5987702206751434

(0, 9) 0.2993851103375717

(1, 0) 0.4673509818107163

(1, 13) 0.4673509818107163

(1, 2) 0.4673509818107163

(1, 16) 0.4673509818107163

(1, 15) 0.35543246785041743

(2, 17) 0.3898880096169543

(2, 1) 0.3898880096169543

(2, 3) 0.3898880096169543

(2, 10) 0.3898880096169543

(2, 14) 0.3898880096169543

(2, 12) 0.3898880096169543

(2, 11) 0.29651988085384556

In each tuple above, the first element is the row number (the sentence) and the second element is the column number (the term) in the matrix. The number next to the tuple is the TF-IDF weight of that term in that sentence.
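To make the column numbers easier to read, here is a small sketch that maps them back to terms. It assumes the stemmed sentences look like the hand-written strings below (a stand-in for the output of preprocess(), not copied from a real run), and it relies on TfidfVectorizer numbering its columns in alphabetical order of the vocabulary.

```python
# Assumed result of preprocessing the three sentences (hand-written stand-in,
# punctuation left out because TfidfVectorizer ignores it by default).
stemmed_sentences = [
    "kesri good movi watch famili good stori india freedom fight",
    "success depend perform actor stori",
    "new movi releas month due corona viru",
]

# Columns are numbered by sorting the vocabulary alphabetically.
vocabulary = sorted({term for s in stemmed_sentences for term in s.split()})

# Non-zero entries printed above for row 1 (the second sentence).
row_1_entries = [
    (1, 0, 0.4673509818107163),
    (1, 13, 0.4673509818107163),
    (1, 2, 0.4673509818107163),
    (1, 16, 0.4673509818107163),
    (1, 15, 0.35543246785041743),
]

for row, col, weight in row_1_entries:
    print(f"sentence {row}, column {col} -> {vocabulary[col]}: {weight:.4f}")
```

Under these assumptions, columns 0, 13, 2 and 16 are actor, perform, depend and success, and column 15 is stori, the only term the second sentence shares with another sentence.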

Now let us convert the model output into the matrix format:

print(tfidf_model.toarray())

We can also convert the above matrix into the data-frame by using below command along with features name as column.

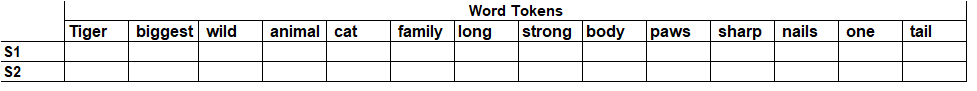

pd.DataFrame(tfidf_model.toarray(), columns = vectorizer.get_feature_names())

Now we will get the following output:

If we look at the above TF-IDF matrix, we find that the most important term in the first sentence is good, and the most important terms in the second sentence are actor, depend, perform and success.
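To see where these weights come from, the sketch below recomputes them by hand. It assumes scikit-learn's default TfidfVectorizer settings (raw term counts, smoothed IDF of ln((1 + n) / (1 + df)) + 1, and L2 normalisation of each row) and uses hand-written stemmed tokens as a stand-in for the output of preprocess(); the names docs, df and tfidf_row are only for illustration.

```python
import math
from collections import Counter

# Assumed stemmed tokens per sentence (hand-written stand-in for preprocess()).
docs = [
    "kesri good movi watch famili good stori india freedom fight".split(),
    "success depend perform actor stori".split(),
    "new movi releas month due corona viru".split(),
]

n_docs = len(docs)

# Document frequency: the number of sentences each term appears in.
df = Counter(term for doc in docs for term in set(doc))

def tfidf_row(doc):
    """TF-IDF weights for one sentence, following scikit-learn's defaults."""
    counts = Counter(doc)
    # term count multiplied by the smoothed IDF: ln((1 + n) / (1 + df)) + 1
    raw = {t: c * (math.log((1 + n_docs) / (1 + df[t])) + 1) for t, c in counts.items()}
    # L2-normalise so that every row has unit length
    norm = math.sqrt(sum(w * w for w in raw.values()))
    return {t: w / norm for t, w in raw.items()}

weights = [tfidf_row(doc) for doc in docs]

print(round(weights[0]["good"], 4))   # 0.5988
print(round(weights[0]["stori"], 4))  # 0.2277
print(round(weights[1]["actor"], 4))  # 0.4674
```

The term good appears twice in the first sentence and in no other sentence, so it gets the highest weight there, while stori appears in two sentences and is weighted lower.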

In this way, we can extract the features of documents using the TF-IDF method. If you have any queries or need any clarification, please post them in the comment section and I would love to help you.

Please do not forget to follow me on my blog page. Next week we will be discussing the Levenshtein distance or Edit Distance concept in NLP.

Stay safe and healthy.
