Levenshtein distance concept in NLP

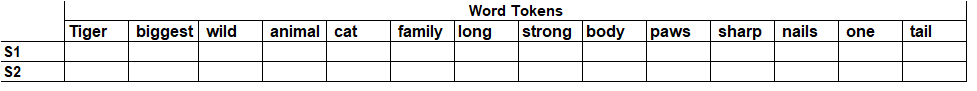

In my last blog, we discussed how to implement the TF-IDF method using Python; for more details, refer to [ TF - IDF Implementation Using Python ]. In this blog, we will discuss how to handle spelling correction so that stemming or lemmatization can be done effectively.

There is a concept known as "edit distance": "Edit distance is the minimum number of edits required to transform one string into another string."

We can perform the following types of operations:

- Insertion of a letter
- Deletion of a letter
- Modification (substitution) of a letter

Let's take an example to understand this concept in more detail. The word "success" is written as "sucess". We have two strings: one of length 7 (the correct spelling) and another of length 6 (the incorrect spelling).

Step 1: If the strings have lengths M and N, we need to create a matrix of size (M+1) × (N+1). In our case, with lengths 6 and 7, we create a matrix of size 7 × 8 as follows.

Step 2: Initialize the first row and first column st...
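To make the matrix idea concrete, here is a minimal Python sketch of the edit distance computation. It is only an illustration under some assumptions: the function name `levenshtein` is made up for this example, the first row and first column are assumed to be filled with 0, 1, 2, ... (the usual initialization), and the remaining cells are filled using the standard Levenshtein recurrence, with each insertion, deletion, or modification costing 1.

```python
def levenshtein(source: str, target: str) -> int:
    """Return the edit distance between source and target (hypothetical helper)."""
    rows, cols = len(source) + 1, len(target) + 1

    # Step 1: create a matrix of size (M+1) x (N+1), filled with zeros.
    dist = [[0] * cols for _ in range(rows)]

    # Step 2 (assumed initialization): first column and first row hold 0, 1, 2, ...
    # i.e. the cost of deleting or inserting every letter of a prefix.
    for i in range(rows):
        dist[i][0] = i
    for j in range(cols):
        dist[0][j] = j

    # Fill the remaining cells using the standard recurrence.
    for i in range(1, rows):
        for j in range(1, cols):
            # No cost if the letters match, otherwise one modification.
            cost = 0 if source[i - 1] == target[j - 1] else 1
            dist[i][j] = min(
                dist[i - 1][j] + 1,         # deletion
                dist[i][j - 1] + 1,         # insertion
                dist[i - 1][j - 1] + cost,  # modification (or match)
            )

    # The bottom-right cell holds the distance between the full strings.
    return dist[-1][-1]


print(levenshtein("sucess", "success"))  # 1: one missing letter has to be inserted
```

With "sucess" (length 6) as the rows and "success" (length 7) as the columns, the matrix built here is 7 × 8, matching the size used in the example.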