Stemming and Lemmatization

In my last

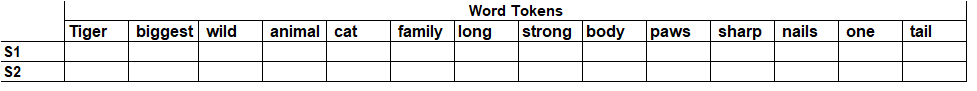

blog we have discussed, bag of words

method for extracting features from text [refer this link Feature

Extraction - Text- Bag of word ]. The drawback of bag of word method

is size of bow matrix due to redundant tokens. if we will use these redundant

tokens in building any machine learning model, it will be inefficient or will

not perform good.

To solve the redundant-token problem, we can use "stemming" and "lemmatization".

Stemming

Stemming makes sure that different variations of a word are represented by a single word. For example, run, runs, and running are all represented by the single word "run". The process of reducing the inflected forms of a word to its root, or stem, is called stemming.

| Root/Stem Word | Variations of root word |
|---|---|
| Gain | Gained, gaining, gainful |
| Do | Do, done, doing, does |

Martin Porter developed an algorithm that performs stemming by removing common morphological endings from English words. The algorithm is known as the "Porter stemming algorithm" (or Porter stemmer). It is rule-based: Porter defined a set of rules that reduce a word to its root form. Simplified examples of such rules:

| Rule | Words | Root form |
|---|---|---|
| ED -> ' ' | Worked | Work |
| IES -> Y | Denies | Deny |
| ING -> ' ' | Doing | Do |
| S -> ' ' | Cats | Cat |

Still, there are some variations of a word that the Porter stemming algorithm cannot handle. Irregular forms such as foot and feet, teach and taught, or go and went cannot be reduced to a common root by suffix rules like those above. To solve this problem, let's discuss lemmatization.
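
To make the rule-based idea concrete, here is a minimal sketch of a suffix-stripping stemmer built only from the four example rules in the table. It is a toy illustration, not the actual Porter algorithm, which has many more rules and conditions; the names `RULES` and `toy_stem` and the minimum-length check are my own assumptions.

```python
# Toy suffix-stripping stemmer: a simplified illustration, NOT the full Porter algorithm.
# Each rule is (suffix, replacement); rules are tried in order and the first match wins.
RULES = [
    ("ies", "y"),  # denies -> deny
    ("ing", ""),   # doing  -> do
    ("ed", ""),    # worked -> work
    ("s", ""),     # cats   -> cat
]


def toy_stem(word: str) -> str:
    """Lowercase the word and apply the first matching suffix rule."""
    word = word.lower()
    for suffix, replacement in RULES:
        # Assumption: keep at least two characters so very short words are left alone.
        if word.endswith(suffix) and len(word) - len(suffix) >= 2:
            return word[: len(word) - len(suffix)] + replacement
    return word


for w in ["Worked", "Denies", "Doing", "Cats", "Feet", "Taught", "Went"]:
    print(w, "->", toy_stem(w))
```

The regular forms are reduced as in the table, while "Feet", "Taught", and "Went" come back unchanged apart from lowercasing, because no suffix rule can connect them to "foot", "teach", or "go".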

Lemmatization

Lemmatization is another method for reducing the inflected form of a word to its dictionary form, which is called the "lemma". The process relies on a dictionary of the language: it looks each word up in the dictionary to find its root.

For example, WordNet is a lexical database that can be used to lemmatize English words. Lemmatization is computationally more expensive than stemming because it has to search the dictionary to find the root form of each word.
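
The dictionary-lookup idea can be sketched in a few lines. In this toy example a small hand-written dictionary stands in for a real lexical database such as WordNet; the entries and the names `LEMMA_DICT` and `toy_lemmatize` are assumptions made purely for illustration.

```python
# Toy lemmatizer: a tiny hand-written dictionary stands in for a real lexical
# database such as WordNet. The entries below are illustrative only.
LEMMA_DICT = {
    "feet": "foot",
    "taught": "teach",
    "went": "go",
    "ran": "run",
    "running": "run",
    "done": "do",
    "does": "do",
}


def toy_lemmatize(word: str) -> str:
    """Return the dictionary form if the word is known, else the lowercased word."""
    key = word.lower()
    return LEMMA_DICT.get(key, key)


for w in ["Feet", "Taught", "Went", "Cat"]:
    print(w, "->", toy_lemmatize(w))
```

Unlike suffix rules, the lookup handles irregular forms, but only for words the dictionary actually contains. That is why a real lemmatizer needs a large dictionary.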

Using these two methods, we can reduce the problem of redundant tokens and use the resulting data to build machine learning models, such as a spam/ham detector or a sentiment predictor, more effectively.
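
As a rough illustration of the effect on the bag-of-words matrix, the sketch below counts distinct tokens (that is, matrix columns) before and after normalization. The `normalize` function and its `NORMAL_FORMS` mapping are hypothetical stand-ins for a real stemmer or lemmatizer, and the two "documents" are just the word variations from the first table.

```python
# Compare vocabulary size before and after normalizing tokens.
# normalize() is a hypothetical stand-in for a real stemmer or lemmatizer.
NORMAL_FORMS = {
    "gained": "gain", "gaining": "gain", "gainful": "gain",
    "done": "do", "doing": "do", "does": "do",
}


def normalize(token: str) -> str:
    """Map a token to its normal form if known, else return it lowercased."""
    token = token.lower()
    return NORMAL_FORMS.get(token, token)


def vocabulary(docs, transform=str.lower):
    """Set of distinct tokens across all documents after applying transform."""
    return {transform(tok) for doc in docs for tok in doc.split()}


docs = ["gain gained gaining gainful", "do done doing does"]
raw_vocab = vocabulary(docs)
reduced_vocab = vocabulary(docs, transform=normalize)
print(len(raw_vocab), "columns before;", len(reduced_vocab), "columns after")
```

Here the eight raw tokens collapse into two columns, "gain" and "do". On real text the reduction is less dramatic, but the principle is the same.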

In my next blog we will discuss another method, called "TF-IDF", for converting text into matrix form, along with a Python implementation of bag-of-words, stemming, and lemmatization.

I hope you learned something today. Stay tuned and subscribe to the blog to get notifications. Do not forget to share your thoughts or comments. Stay safe and healthy, and let's keep learning.
