Power Law or Zipf’s Law: Word Frequency Distribution

This article will help you understand the basic concepts of lexical processing for text data before that data is used in any machine learning model.

Working with any type of data, be it numeric, textual or images, involves the following steps:

- Explore: pre-process the data

- Understand the data

As text is made up of words, sentences and paragraphs, exploring text data can start with analyzing the word frequency distribution.

The famous linguist George Zipf started with a simple exercise:

- Count the number of times each word appears in the document

- Rank the words by frequency. The most frequent word is given rank 1, the second most frequent word is given rank 2, and so on.
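The two steps above can be sketched in a few lines of Python. This is only an illustration, not Zipf's own procedure: the function name `rank_words` and the tokenizing rule (lowercase runs of letters, with an optional apostrophe inside a word) are assumptions made for this example.

```python
import re
from collections import Counter


def rank_words(text):
    """Return (rank, word, count) tuples, most frequent word first."""
    # Assumed tokenizer: lowercase letters, allowing apostrophes inside words.
    words = re.findall(r"[a-z]+(?:['’][a-z]+)*", text.lower())
    counts = Counter(words)
    # most_common() orders by descending count; ties keep first-seen order.
    return [(rank, word, count)
            for rank, (word, count) in enumerate(counts.most_common(), start=1)]


sample = "The boy saw the wolf and the wolf saw the sheep"
for rank, word, count in rank_words(sample)[:3]:
    print(rank, word, count)
```

Words with equal counts still receive different ranks here; how ties are handled is a design choice and other conventions are possible.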

He repeated this exercise on many documents and found a specific pattern in how words are distributed in a document. Based on the pattern he observed, he formulated a principle known as “Zipf’s Law” or the “Power Law”: the frequency of a word is roughly inversely proportional to its rank.
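A common textbook way of writing Zipf's law as a formula is shown here. The symbols are introduced only for illustration: $f(r)$ is the frequency of the word at rank $r$, $C$ is a constant that depends on the document, and $s$ is an exponent that is often close to 1 for natural language text.

$$
f(r) \approx \frac{C}{r^{s}}, \qquad \log f(r) \approx \log C - s \log r
$$

With $s = 1$, the second most frequent word would appear about half as often as the first, and the third about one third as often. The logarithmic form says that rank against frequency should look roughly like a straight line on a log-log plot.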

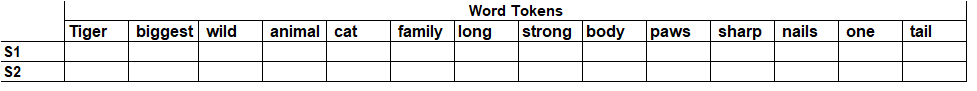

Let’s analyze the word frequencies of the following short story:

Once upon a time, there lived a shepherd boy who was bored watching his flock of sheep on the hill. To amuse himself, he shouted, “Wolf! Wolf! The sheep are being chased by the wolf!” The villagers came running to help the boy and save the sheep. They found nothing and the boy just laughed looking at their angry faces.

“Don’t cry ‘wolf’ when there’s no wolf boy!”, they said angrily and left. The boy just laughed at them.

After a while, he got bored and cried ‘wolf!’ again, fooling the villagers a second time. The angry villagers warned the boy a second time and left. The boy continued watching the flock. After a while, he saw a real wolf and cried loudly, “Wolf! Please help! The wolf is chasing the sheep. Help!”

But this time, no one turned up to help. By evening, when the boy didn’t return home, the villagers wondered what happened to him and went up the hill. The boy sat on the hill weeping. “Why didn’t you come when I called out that there was a wolf?” he asked angrily. “The flock is scattered now”, he said.

An old villager approached him and said, “People won’t believe liars even when they tell the truth. We’ll look for your sheep tomorrow morning. Let’s go home now”.

We can clearly see that the most frequent words in the story are words like a, an, and, he, the, on, to, etc. These most frequent words are called “Stop Words” or “Language Builder Words”.

As stop words do not necessarily tell us what the document is actually about, they are usually not relevant for analysis.

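Studies of word frequency across various documents suggest that the most relevant, or most significant, words lie in the middle of the rank order, between the very frequent stop words and the very rare words. When relevance is plotted against rank, it is often drawn as a bell-shaped curve resembling the well-known “Gaussian Distribution” or “Bell Curve”.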

So when we work with textual data, we usually remove the stop words from the data.

Since stop words occur very frequently, removing them results in:

- Smaller data in terms of size

- Fewer features to work with
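As a rough illustration of both effects, the sketch below removes stop words from the first sentence of the story and compares the counts before and after. The stop word list is a tiny hand-picked set assumed for this example; real projects usually rely on a larger curated list.

```python
import re

# Hand-picked stop words for illustration only (an assumption, not a standard list).
STOP_WORDS = {"a", "an", "and", "he", "the", "on", "to", "of", "his", "was", "who"}


def tokenize(text):
    # Assumed tokenizer: lowercase letters, allowing apostrophes inside words.
    return re.findall(r"[a-z]+(?:['’][a-z]+)*", text.lower())


def remove_stop_words(tokens, stop_words=STOP_WORDS):
    # Keep only the tokens that are not in the stop word set.
    return [t for t in tokens if t not in stop_words]


sentence = ("Once upon a time, there lived a shepherd boy who was bored "
            "watching his flock of sheep on the hill.")
tokens = tokenize(sentence)
kept = remove_stop_words(tokens)
print(len(tokens), "tokens before,", len(kept), "after")
print(len(set(tokens)), "distinct words before,", len(set(kept)), "after")
```

The token count corresponds to the size of the data, and the number of distinct words corresponds to the number of features in a simple bag-of-words representation.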

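Based on the above understanding, we can explore textual data and check how the word frequencies are distributed. This matters because raw word frequencies are heavily skewed (they follow a power law rather than a normal distribution), while some machine learning algorithms work best when the data is roughly normally distributed.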
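One simple way to explore how word frequencies are distributed is to fit a straight line to log frequency against log rank; a slope near -1 is what Zipf's law would predict. The sketch below is a minimal example under that assumption. The function name `zipf_slope` is hypothetical, and a short text like the sample will give only a noisy estimate.

```python
import math
import re
from collections import Counter


def zipf_slope(text):
    """Least-squares slope of log(frequency) against log(rank)."""
    words = re.findall(r"[a-z]+(?:['’][a-z]+)*", text.lower())
    # Frequencies sorted from most to least frequent; position gives the rank.
    freqs = sorted(Counter(words).values(), reverse=True)
    if len(freqs) < 2:
        raise ValueError("need at least two distinct words")
    xs = [math.log(rank) for rank in range(1, len(freqs) + 1)]
    ys = [math.log(f) for f in freqs]
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    # Ordinary least squares: slope = covariance(x, y) / variance(x).
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    return sxy / sxx


text = "the wolf the sheep the boy the wolf a boy a hill"
print(round(zipf_slope(text), 2))
```

A strongly negative slope indicates a skewed, power-law-like distribution rather than a symmetric bell shape.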

Kindly share any queries you would like me to address in the comments section.

Please do subscribe to the blog
